Author: Liu Rui, Cailian Press

On Tuesday, March 18 (local time), Nvidia CEO Jensen Huang delivered the keynote at GTC 2025, Nvidia's AI conference, in San Jose, California.

In the two-hour-20-minute keynote, Huang laid out his outlook for the evolution of AI technology and computing demand. He announced planned shipment dates for Nvidia's latest Blackwell-architecture products and for the generations that follow. He also described the progress of Nvidia's joint research and development with other technology giants in autonomous driving, AI networking and robotics.

Despite the wealth of announcements, Wall Street's reaction was muted: Nvidia shares closed down 3.43% on Tuesday and fell a further 0.56% in after-hours trading.

Envisioning the future: computing demand still has enormous room to grow

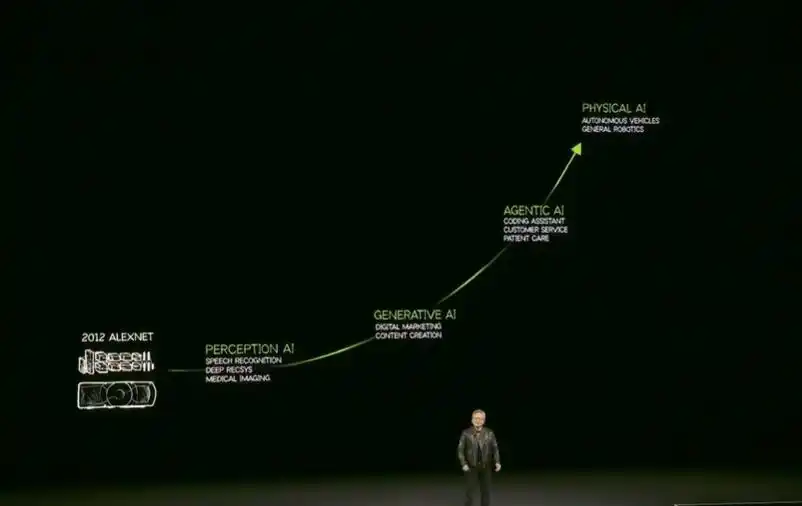

Huang opened his keynote by framing his vision for AI around the timeline of its development so far. He described four waves of AI:

- Perception AI: Emerged about 10 years ago and focused on speech recognition and other simple tasks.

- Generative AI: The focus of the past five years, creating text and images by predicting patterns.

- Agentic AI: The current stage, in which AI interacts with digital environments and performs tasks autonomously; it is characterized by reasoning models.

- Physical AI: The next stage, powering humanoid robots and real-world applications.

Huang noted that the AI industry faces “huge challenges” in computing, saying that at AI's current stage, computation requires 100 times more tokens and resources than originally expected. He explained that this is because reasoning models generate tokens at every step of the reasoning process.

Even so, Huang said the industry is responding well and the demand for more computing is being met, stressing that the AI infrastructure segment has grown remarkably in just one year.

He revealed that the four largest US cloud service providers (CSPs), the so-called hyperscalers, bought 1.3 million Nvidia Hopper-architecture chips in 2024 and have already bought 3.6 million Blackwell-architecture chips so far in 2025.

He expects data center infrastructure to expand rapidly, predicting that capital expenditures on it will exceed $1 trillion by the end of 2028, driven by demand for AI and accelerated computing.

Unveiling the product roadmap for the next few years

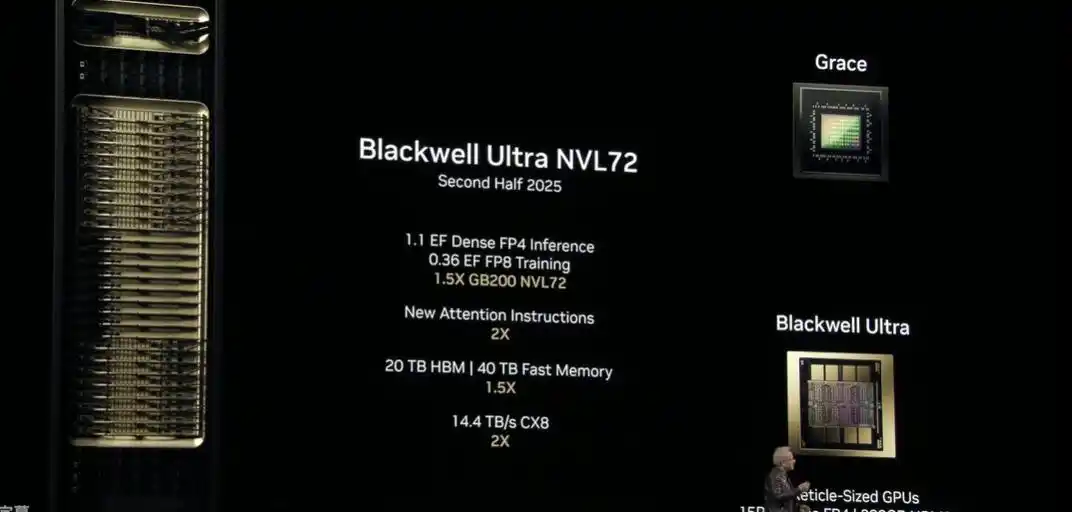

Next, as widely expected, Huang confirmed that Nvidia will launch Blackwell Ultra, the successor to the current Blackwell GPU, in the second half of 2025.

Huang said: "Blackwell is in full production and the output growth is incredible. Customer demand is incredible... We will easily transition to the upgraded version (Blackwell Ultra)."

Alongside the Blackwell Ultra chip, Nvidia also unveiled the GB300 superchip, which combines two Blackwell Ultra chips with a Grace CPU.

Huang also said Nvidia will launch its next-generation AI superchip, Vera Rubin, in the second half of 2026, followed by Vera Rubin Ultra in the second half of 2027, which is also in line with earlier market expectations.

Huang also revealed that the generation after Rubin will be named after physicist Richard Feynman, continuing Nvidia's tradition of naming its chip families after scientists. According to the slides Huang showed, Feynman is expected to arrive in 2028.

New AI computers are coming

Beyond the chips, Huang also announced new laptops and desktop computers using Nvidia's chips, including two AI-focused computers called DGX Spark and DGX Station, which will be able to run large AI models such as Llama or DeepSeek.

DGX Spark is the machine first shown as Project Digits at CES earlier this year, while DGX Station is a larger, workstation-class desktop.

Huang called DGX Spark "the world's smallest supercomputer." In a chassis not much larger than a Mac mini, it packs a GB10 Grace Blackwell superchip delivering up to 1,000 TOPS of AI compute, making it suitable for "AI developers, research experts, data scientists and students to develop and fine-tune large AI models in an offline environment." Spark is expected to cost around $3,000; pre-orders opened on the day of the keynote, and shipments are expected this summer. Dell, Lenovo, HP and others are expected to launch their own Spark-based systems.

DGX Spark

The more powerful DGX Station uses the GB300 Grace Blackwell Ultra superchip, providing 20,000 TOPS of AI compute and up to 784GB of memory. Its price has not been announced, but it is expected to be available later this year.

Dynamo: The core operating system of the AI factory

To further accelerate large-scale inference, Huang also unveiled NVIDIA Dynamo, open-source inference software for accelerating and scaling AI reasoning models in AI factories.

Huang described Dynamo as "essentially the operating system of the AI factory." The software is named after the dynamo, the machine that kicked off the last industrial revolution, signaling the key role Nvidia expects it to play in the new AI revolution.

According to Nvidia, Dynamo can deliver a 30-fold performance improvement for reasoning models such as DeepSeek on the same architecture with the same number of GPUs.

Launching the world's first open, customizable general-purpose robot model

Huang pointed out that labor shortages are an urgent problem facing the whole world and that robots offer a solution, giving the industry enormous potential. Having entered the era of agentic AI, he said, the industry will next move on to physical AI.

To this end, Nvidia launched GR00T N1, a general-purpose foundation model designed specifically for robots, which the company calls the world's first open, fully customizable foundation model for generalized humanoid reasoning and skills.

Nvidia is also working with Google DeepMind and Disney Research to develop Newton, an open-source physics engine for robotics simulation. Huang brought a robot named "Blue" onstage for a demonstration; Blue is one of the results of the Newton collaboration.

Robots created by NVIDIA in collaboration with Disney Research and Google DeepMind also appeared on stage

Partnering with GM on AI self-driving and smart factories

Huang also announced that GM will expand its partnership with Nvidia to drive innovation through accelerated computing and simulation.

GM will use Nvidia’s computing platforms, including Omniverse and Cosmos, to build custom artificial intelligence (AI) systems to optimize GM’s factory planning and robotics.

GM will also use NVIDIA DRIVE AGX as in-vehicle hardware to power future advanced driver-assistance systems and enhanced in-vehicle safety features. DRIVE AGX is an open, scalable platform that serves as the AI brain of self-driving cars.

Co-developing AI-native 6G networks

Huang said Nvidia will work with T-Mobile, MITRE, Cisco, ODC and Booz Allen Hamilton to develop the hardware, software and architecture for AI-native 6G wireless networks.

Establishing a quantum computing research center

Nvidia also announced on Tuesday that it will establish a research center in Boston to provide cutting-edge technology for advancing quantum computing.

According to Nvidia's official website, the NVIDIA Accelerated Quantum Research Center (NVAQC) will integrate leading quantum hardware with AI supercomputers to achieve what the company calls accelerated quantum supercomputing. NVAQC will help tackle the most challenging problems in quantum computing, from qubit noise to turning experimental quantum processors into practical devices.

Leading quantum computing innovators, including Quantinuum, Quantum Machines and QuEra Computing, will use NVAQC to drive advances through collaborations with researchers at top universities, including the Harvard Quantum Initiative in Science and Engineering (HQI) and the Engineering Quantum Systems (EQuS) group at the Massachusetts Institute of Technology (MIT).

“Quantum computing will enhance AI supercomputers to solve some of the world’s most important problems, from drug discovery to materials development,” Huang said. “Working with the broader quantum research community to advance CUDA-quantum hybrid computing, the NVIDIA Accelerated Quantum Research Center will make breakthroughs in creating large-scale, useful accelerated quantum supercomputers.”

Launching the Cosmos world foundation models

Nvidia also announced new NVIDIA Cosmos™ world foundation models (WFMs) on Tuesday, introducing an open, fully customizable reasoning model for physical AI development and giving developers unprecedented control over world generation.

Nvidia also launched two new Blueprints, powered by the NVIDIA Omniverse™ and Cosmos platforms, that provide developers with large-scale, controllable synthetic data generation engines for training robots and autonomous vehicles.

Industry leaders including 1X, Agility Robotics, Figure AI, Foretellix, Skild AI and Uber are early adopters of Cosmos, using it to generate richer training data for physical AI faster and at scale.

Huang said: “Just as large language models revolutionized generative and agentic AI, Cosmos world foundation models are a breakthrough for physical AI… Cosmos introduces an open, fully customizable reasoning model for physical AI and creates opportunities for step-function advances in robotics and the physical industries.”