Original: Archetype

Compiled by: Yuliya, PANews

As artificial intelligence and blockchain technologies develop rapidly, the intersection of these two fields is giving rise to exciting innovations. This article takes a deep look at the top ten areas worth paying attention to in 2025, from the interaction of intelligent agents to decentralized computing, from the transformation of data markets to breakthroughs in privacy technology.

1. Interaction between agents

The inherent transparency and composability of blockchain make it an ideal base layer for agent-to-agent interaction. Intelligent agents developed by different entities for different purposes can interact seamlessly on-chain. Some impressive experiments already exist, such as fund transfers between agents and joint token issuance.

The potential of agent-to-agent interaction lies mainly in two areas: first, creating new application categories, such as social scenarios driven by agent interaction; second, streamlining existing enterprise workflows, including platform authentication and verification, micropayments, cross-platform workflow integration, and other traditionally cumbersome steps.

Aethernet and Clanker jointly issue tokens on the Warpcast platform

2. Decentralized Intelligent Agent Organization

Large-scale multi-agent coordination is another exciting area of research. It concerns how multi-agent systems work together to complete tasks, solve problems, and govern systems and protocols. In his early-2024 article "The promise and challenges of crypto + AI applications", Vitalik mentioned the possibility of using AI agents in prediction markets and arbitration. He believes that, at scale, multi-agent systems have shown significant potential in "truth" discovery and autonomous governance systems.

The industry is continuously exploring and experimenting with the capabilities of multi-agent systems and various forms of "swarm intelligence". As an extension of inter-agent coordination, coordination between agents and humans also constitutes an interesting design space, especially in terms of how communities interact around agents and how agents organize humans to carry out collective actions.

Of particular interest are agent experiments in which the objective function involves large-scale human coordination. Such applications require corresponding verification mechanisms, especially when the human work is done off-chain. This human-machine collaboration may give rise to some unique and interesting emergent behaviors.

3. Intelligent Agent Multimedia Entertainment

The concept of digital personality has been around for decades.

- Hatsune Miku, launched in 2007, has held sold-out concerts in 20,000-seat venues;

- Lil Miquela, a virtual influencer created in 2016, has more than 2 million followers on Instagram;

- Neuro-sama, an AI VTuber launched in 2022, has accumulated more than 600,000 subscribers on Twitch;

- The virtual Korean group PLAVE, which was established in 2023, has received more than 300 million views on YouTube in less than two years.

With advances in AI infrastructure and the integration of blockchain for payments, value transfer, and open data platforms, these intelligent agents are expected to achieve a higher degree of autonomy by 2025, potentially ushering in a whole new mainstream entertainment category.

Clockwise from top left: Hatsune Miku, Virtuals' Luna, Lil Miquela and PLAVE

4. Generative/Intelligent Agent Content Marketing

In the previous case, intelligent agents are the product themselves. Agents can also serve as complementary tools for other products. In today's attention economy, a continuous output of engaging content is critical to the success of any idea, product, or company. Generative and agent-produced content is becoming a powerful way for teams to keep content production running 24/7.

Development in this area has been accelerated by the debate over where the boundary lies between memecoins and intelligent agents. Even where they are not yet fully "intelligent", agents have become a powerful distribution channel for memecoins.

The gaming sector provides another example. Modern games increasingly need to stay dynamic to maintain user engagement. Traditionally, cultivating user-generated content (UGC) has been the standard way to keep a game dynamic. Purely generative content (including in-game items, NPC characters, and fully generated levels) may represent the next stage of this evolution. Looking ahead to 2025, the capabilities of intelligent agents will greatly expand the boundaries of traditional distribution strategies.

5. Next-generation art tools and platforms

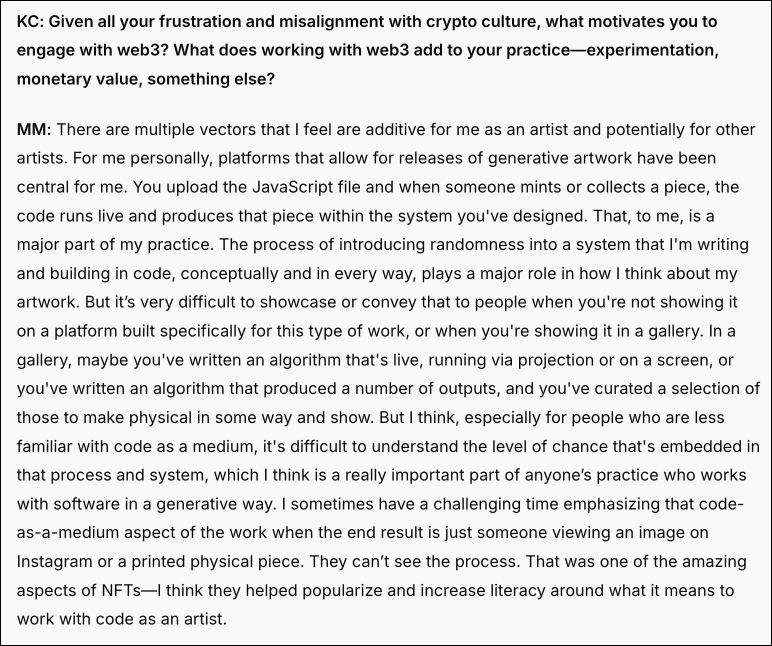

The IN CONVERSATION WITH series, launched in 2024, interviews artists in music, visual arts, design, and curation who are active in or on the fringes of the cryptocurrency space. These interviews reveal an important observation: artists interested in cryptocurrency tend also to be interested in cutting-edge technology more broadly, and tend to integrate it deeply into the aesthetics or core of their artistic practice, for example through AR/VR objects, code-based art, and real-time programming art.

Generative art and blockchain technology have always had synergies, which makes blockchain's potential as infrastructure for AI art even more apparent. These new art media are extremely difficult to display properly on traditional platforms. The ArtBlocks platform offers a glimpse of how blockchain technology can be used to display, store, monetize, and preserve digital art, significantly improving the experience for both artists and audiences.

In addition to display functions, AI tools also expand the ability of ordinary people to create art. This democratization trend is reshaping the landscape of art creation. Looking forward to 2025, how blockchain technology will expand or empower these tools will be an extremely attractive development direction.

Excerpted from "IN CONVERSATION WITH: Maya Man"

6. Data Market

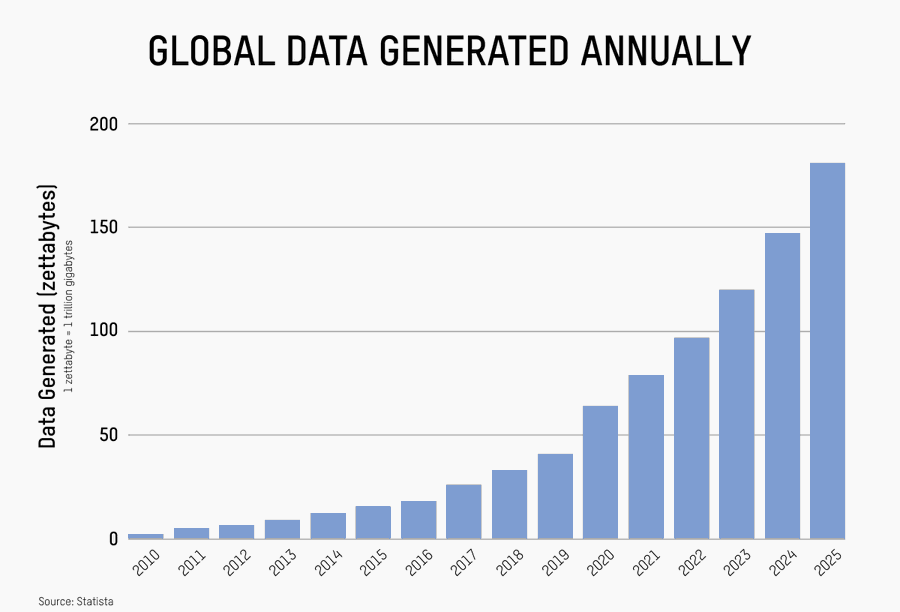

It has been 20 years since Clive Humby coined the phrase "data is the new oil", and companies have been taking strong steps to hoard and monetize user data. Users have realized that their data is the cornerstone of these multi-billion dollar companies, but they have little control over how their data is used and cannot share in the profits created by the data. With the rapid development of powerful AI models, this contradiction has become more prominent.

Data markets face a two-fold opportunity: first, to address the exploitation of user data; second, to address the shortage of data supply, as ever larger and better models exhaust the easily accessible "oil field" of public Internet data and require new sources.

Data power returns to users

The question of how to leverage decentralized infrastructure to return data power to users is a vast design space that requires innovative solutions in multiple areas. Some of the most pressing questions include:

- Where data is stored and how to protect privacy during storage, transmission, and computation;

- How to objectively assess, screen and measure data quality;

- What mechanisms are used for attribution and monetization (especially tracing value back to its source after inference);

- What kind of orchestration or data retrieval systems are used across diverse model ecosystems.

Supply constraints

In addressing supply constraints, the key is not simply to replicate Scale AI's model with tokens. It is to understand where favorable technology trends create an edge, and how to build solutions with a competitive advantage in scale, quality, or better incentive (and screening) mechanisms that yield higher-value data products. Because most demand still comes from Web2 AI, how to combine smart-contract enforcement with traditional service level agreements (SLAs) and tools is an important area of research.

7. Decentralized computing

If data is an essential element of AI development and deployment, computing power is another key component. The traditional large-scale data center model, with its unique advantages in space, energy, and hardware, has largely dominated the development trajectory of deep learning and AI in the past few years. However, physical limitations and the development of open source technologies are challenging this pattern.

- The first phase (v1) of decentralized AI computing is essentially a remake of Web2 GPU cloud services, with no real advantages on the supply side (hardware or data centers) and limited organic demand.

- In the second phase (v2), some outstanding teams built complete technology stacks on top of heterogeneous high-performance computing (HPC) supply, demonstrated unique capabilities in scheduling, routing, and pricing, and developed proprietary features to attract demand and cope with margin compression, especially on the inference side. Teams also began to diverge in use cases and go-to-market strategies, with some focusing on integrating compiler frameworks for efficient inference routing across hardware, and others pioneering distributed model training frameworks on the computing networks they built.

The industry is even beginning to see the rise of the AI-Fi market, with innovative economic primitives emerging that transform computing power and GPUs into yield-generating assets, or leverage on-chain liquidity to provide data centers with alternative sources of funding for hardware purchases.

The main question here is to what extent decentralized AI will be developed and deployed on decentralized computing infrastructure, or whether, as in the storage area, the gap between ideals and actual needs will always remain, making it difficult for the concept to realize its full potential.

8. Compute accounting standards

In terms of the incentive mechanism of decentralized high-performance computing networks, a major challenge in coordinating heterogeneous computing resources is the lack of a unified computing accounting standard. AI models add multiple unique complexities to the output space of high-performance computing, including model variants, quantization schemes, and the level of randomness that can be adjusted through model temperature and sampling hyperparameters. In addition, AI hardware will also produce different output results due to differences in GPU architecture and CUDA version. These factors ultimately lead to the need to establish standards to regulate how models and computing markets measure their computing power in heterogeneous distributed systems.
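Two of the sources of variation named above can be illustrated with a minimal Python sketch. It is not taken from any particular compute network: the function name `softmax_with_temperature` and the logit values are hypothetical, chosen only for illustration. The first part shows how temperature reshapes the distribution a model samples from; the second shows that floating-point addition is not associative, which is one reason different accumulation orders on different hardware can produce slightly different numbers.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw logits into sampling probabilities at a given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate tokens (illustrative values only).
logits = [2.0, 1.0, 0.5]
sharp = softmax_with_temperature(logits, 0.5)  # low temperature: peaked
flat = softmax_with_temperature(logits, 2.0)   # high temperature: flatter

# Floating-point addition is not associative: summing the same three
# values in a different order gives a different result.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)

print(sharp, flat)
print(left_to_right == right_to_left)
```

The same model with the same prompt can therefore yield different outputs depending on sampling settings and on how the hardware orders its arithmetic, which is why exact output equality is a poor basis for compute accounting.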

Partly because these standards are lacking, 2024 saw multiple cases across Web2 and Web3 in which models and compute markets failed to accurately account for the quality and quantity of their compute. As a result, users have had to audit the true performance of these AI layers themselves, by running their own comparative model benchmarks and by rate-limiting the compute market as a form of proof of work.

Looking ahead to 2025, the intersection of cryptography and AI is expected to achieve breakthroughs in verifiability, making crypto-based AI systems easier to verify than traditional AI. For ordinary users, it is critical to be able to make fair comparisons across the defined aspects of a model's or computing cluster's output, which will help in auditing and evaluating system performance.
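One way to picture such a comparison is the hedged sketch below. It assumes, purely for illustration, that an auditor can obtain next-token probability vectors from both a trusted reference run and the provider being audited. The names `total_variation_distance` and `audit_outputs`, the threshold, and the sample data are all hypothetical. The check tolerates small numerical drift and flags large divergence, which could indicate a different model variant or quantization scheme.

```python
def total_variation_distance(p, q):
    """Half the L1 distance between two probability vectors (0 = identical)."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same length")
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def audit_outputs(reference_runs, provider_runs, threshold=0.01):
    """Return the indices of prompts whose provider output drifts past threshold."""
    flagged = []
    for index, (ref, got) in enumerate(zip(reference_runs, provider_runs)):
        if total_variation_distance(ref, got) > threshold:
            flagged.append(index)
    return flagged


# Hypothetical probability vectors for two prompts (illustrative values only).
reference = [[0.7, 0.2, 0.1], [0.5, 0.3, 0.2]]
provider = [
    [0.701, 0.199, 0.1],  # small drift, within tolerance
    [0.2, 0.3, 0.5],      # large divergence, flagged
]

flagged = audit_outputs(reference, provider)
print(flagged)
```

The threshold is a design choice rather than a standard: set too tight, it flags honest providers for ordinary hardware differences; set too loose, it misses substituted models.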

9. Probabilistic Privacy Primitives

In “The promise and challenges of crypto + AI applications,” Vitalik points out a unique challenge when connecting cryptocurrency and AI: “In cryptography, open source is the only way to achieve true security, but in AI, the openness of models (and even their training data) greatly increases their risk of being attacked by adversarial machine learning.”

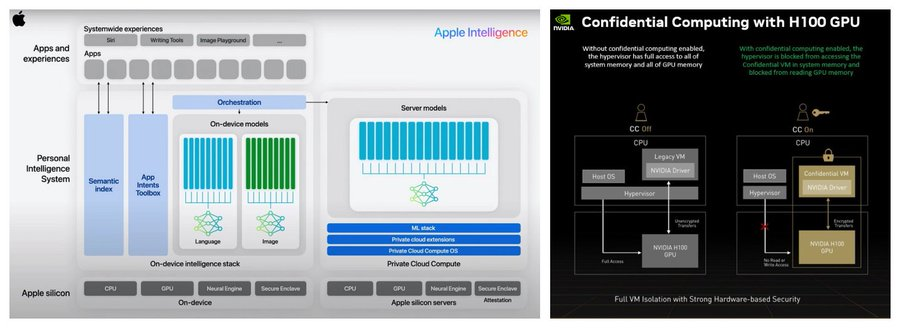

Although privacy is not a new area of blockchain research, the rapid development of AI is accelerating the research and application of privacy-preserving cryptographic primitives. Privacy-enhancing technologies made significant progress in 2024, including zero-knowledge proofs (ZK), fully homomorphic encryption (FHE), trusted execution environments (TEEs), and multi-party computation (MPC), which support general use cases such as private shared state for computation over encrypted data. At the same time, centralized AI giants such as Nvidia and Apple are using proprietary TEEs for federated learning and private AI inference, preserving privacy while keeping hardware, firmware, and models consistent across systems.

Building on these developments, the industry is watching closely how privacy can be preserved under stochastic state transitions, and how such techniques can accelerate real-world deployment of decentralized AI applications on heterogeneous systems. This spans everything from decentralized private inference to storage and access pipelines for encrypted data and fully sovereign execution environments.

Apple's AI technology stack and Nvidia's H100 GPU

10. Agent Intent and Next Generation User Transaction Interface

Over the past 12-16 months, the definitions of intents, agentic actions, agentic intents, solvers, agentic solvers, and related terms have been blurry, as has the way these concepts differ from the conventional "bot" development of recent years. Even so, AI agents conducting on-chain transactions autonomously is one of the use cases closest to implementation.

In the next 12 months, the industry expects to see more complex language systems combined with different data types and neural network architectures, pushing the overall design space forward. This raises several key questions:

- Will the agent use existing on-chain transaction systems, or develop its own tools and methods?

- Will large language models continue to serve as the backend for these agentic trading systems, or will entirely new systems emerge?

- At the interface level, will users begin to use natural language to conduct transactions?

- Will the classic "wallet as browser" concept finally come true?

The answers to these questions will profoundly affect the future direction of cryptocurrency trading. As AI technology advances, agent systems may become more intelligent and autonomous, better able to understand and execute user intent.