Author: Teng Yan, Researcher (focused on Crypto x AI)

Compiled by: Felix, PANews

With the explosion of the AI industry this year, Crypto x AI has risen rapidly. Teng Yan, a researcher focusing on Crypto x AI, published an article and made 10 predictions for 2025. The following are the prediction details.

1. The total market value of crypto AI tokens reaches $150 billion

Currently, the market capitalization of crypto AI tokens only accounts for 2.9% of the altcoin market capitalization, but this ratio will not last long.

AI covers everything from smart contract platforms to memes, DePIN, agent platforms, data networks, and intelligent coordination layers. Its market position is undoubtedly comparable to that of DeFi and memes.

Why so confident?

- Crypto AI is at the convergence of two of the most powerful technologies

- AI craze trigger event: An OpenAI IPO or a similar event may trigger a global craze for AI. At the same time, Web2 capital has begun to focus on decentralized AI infrastructure

- Retail investor craze: The concept of AI is easy to understand and exciting, and retail investors can now invest in it through tokens. Remember the meme gold rush in 2024? AI will be the same craze, except that AI is actually changing the world.

2. Bittensor renaissance

Decentralized AI infrastructure Bittensor (TAO) has been online for years and is a veteran project in the field of crypto AI. Although AI is all the rage, the price of its token has been hovering around the level of a year ago.

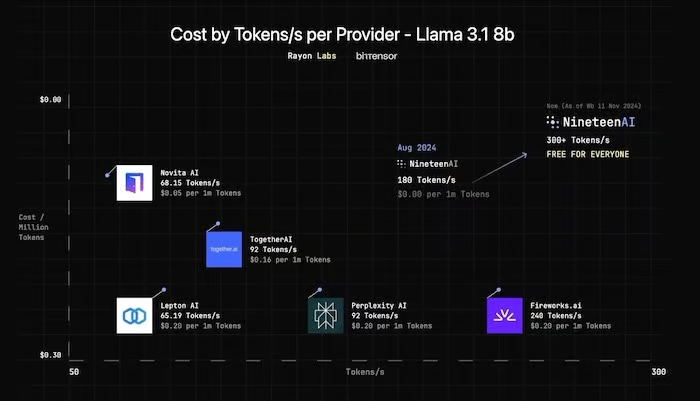

Meanwhile, Bittensor’s digital hivemind has quietly made a leap forward: there are more subnets with lower registration fees, subnets outperform their Web2 counterparts on practical metrics like inference speed, and EVM compatibility brings DeFi-like functionality to Bittensor’s network.

Why hasn’t the TAO token surged? A steep inflation schedule and the market’s focus on agent platforms have held it back. However, dTAO (expected to launch in Q1 2025) could be a major turning point. With dTAO, each subnet will have its own token, and the relative prices of these tokens will determine how emissions are distributed.

Why Bittensor is making a comeback:

- Market-based emission: dTAO ties block rewards directly to innovation and real, measurable performance. The better the subnet, the more valuable its tokens become.

- Focused capital flows: Investors can finally target the specific subnets they believe in. If a particular subnet wins with an innovative distributed training approach, investors can deploy capital to express that view.

- EVM integration: Compatibility with the EVM attracts a wider crypto-native developer community to Bittensor, bridging the gap with other networks.
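To make the market-based emission idea concrete, here is a minimal sketch of how emissions could be split if each subnet's share were simply proportional to its token price. The proportional rule, the function name `split_emissions`, and the subnet names and prices are all illustrative assumptions; the actual dTAO mechanism may weight prices differently.

```python
from typing import Dict


def split_emissions(subnet_prices: Dict[str, float], total_emission: float) -> Dict[str, float]:
    """Split total_emission across subnets in proportion to token price.

    Assumption: a plain proportional rule, used here only for illustration.
    """
    if any(price < 0 for price in subnet_prices.values()):
        raise ValueError("subnet token prices must be non-negative")
    total_price = sum(subnet_prices.values())
    if total_price <= 0:
        raise ValueError("at least one subnet token must have a positive price")
    return {
        name: total_emission * price / total_price
        for name, price in subnet_prices.items()
    }


# Hypothetical subnet names and token prices, not real market data.
prices = {"subnet_a": 0.5, "subnet_b": 0.3, "subnet_c": 0.2}
shares = split_emissions(prices, total_emission=1000.0)

for name, amount in shares.items():
    print(f"{name}: {amount:.1f}")
```

Under this assumed rule, a subnet whose token price rises relative to the others automatically receives a larger slice of new emissions, which is the feedback loop the prediction relies on.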

3. The computing market is the next “L1 market”

The obvious megatrend right now is the insatiable demand for computing.

NVIDIA CEO Jen-Hsun Huang has said that demand for inference will grow “a billion-fold.” This exponential growth will disrupt traditional infrastructure plans, and new solutions are needed.

The decentralized computing layer provides raw computation (for both training and inference) in a verifiable and cost-effective manner. Startups such as Spheron, Gensyn, Atoma, and Kuzco are quietly building a solid foundation, focusing on products rather than tokens (none of these companies have tokens). As decentralized training of AI models becomes practical, the total addressable market will rise dramatically.

Compared with L1:

- Just like in 2021: remember Solana, Terra/Luna, and Avalanche competing for the “best” L1? A similar competition will emerge between compute protocols for developers and AI applications built using their compute layers.

- Web2 Demand: The $680 billion to $2.5 trillion cloud computing market dwarfs the crypto AI market. If these decentralized computing solutions can attract even a small portion of traditional cloud customers, we could see the next wave of 10x or 100x growth.

Just as Solana triumphed in the L1 space, the winner here will dominate a whole new space. Keep a close eye on reliability (e.g. strong service-level agreements or SLAs), cost-effectiveness, and developer-friendly tooling.

4. AI agents will flood blockchain transactions

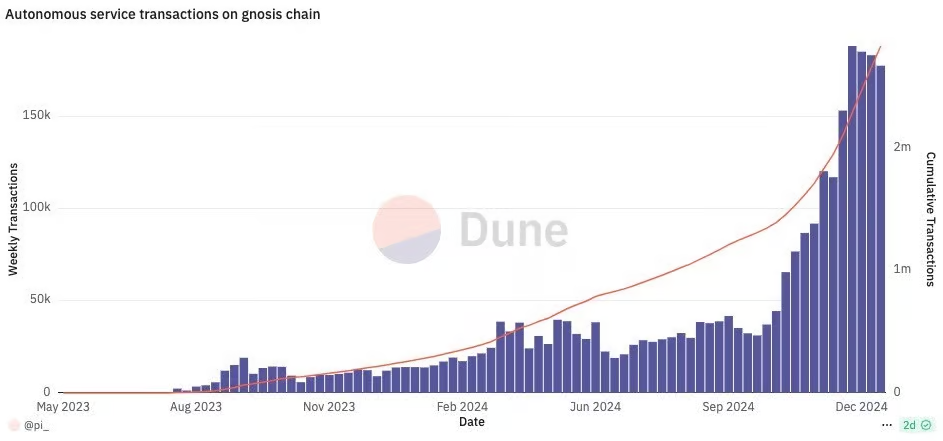

Olas agent transaction on Gnosis; Source: Dune

By the end of 2025, 90% of on-chain transactions will no longer be performed by real humans clicking “send”, but by a swarm of AI agents that continuously rebalance liquidity pools, distribute rewards, or execute micropayments based on real-time data feeds.

This is not as far-fetched as it sounds. Everything built in the past seven years (L1s, rollups, DeFi, NFTs) has quietly paved the way for a world where AI runs on-chain.

Ironically, many builders may not even realize they are creating infrastructure for a machine-dominated future.

Why will this change happen?

- No more human error: Smart contracts execute exactly as they are coded. In turn, AI agents can process large amounts of data faster and more accurately than real humans.

- Micropayments: Transactions driven by these agents will become smaller, more frequent, and more efficient, especially as transaction costs on Solana, Base, and other L1s/L2s trend downward.

- Invisible infrastructure: Humans will gladly give up direct control if it means less hassle.

AI agents will generate a lot of on-chain activity, so it is no wonder that all L1s/L2s are embracing agents.

The biggest challenge is to make these agent-driven systems accountable to humans. As the ratio of transactions initiated by agents to those initiated by humans continues to grow, new governance mechanisms, analytical platforms, and auditing tools will be needed.

5. Interactions between agents: The rise of clusters

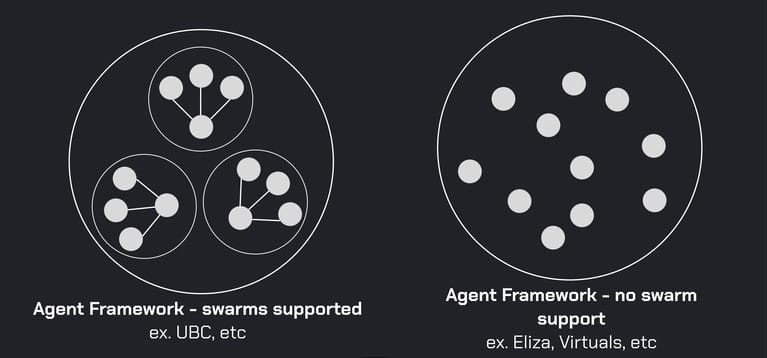

Source: FXN World

The concept of agent swarms — tiny AI agents working together seamlessly to execute grand plans — sounds like the plot for the next big sci-fi/horror movie.

Today’s AI agents are mostly “lone wolves” that operate in isolation with minimal and unpredictable interactions.

Agent swarming will change this, allowing networks of AI agents to exchange information, negotiate, and make collaborative decisions. Think of it as a decentralized collection of specialized models, each contributing unique expertise to larger, more complex tasks.

One cluster might coordinate distributed computing resources on a platform like Bittensor. Another cluster could tackle misinformation, verifying sources in real time before content spreads to social media. Each agent in the cluster is an expert and can perform its task with precision.

These swarm networks will produce intelligence more powerful than any single isolated AI.

For clusters to thrive, common communication standards are essential. Agents need to be able to discover, authenticate, and collaborate regardless of their underlying framework. Teams such as Story Protocol, FXN, Zerebro, and ai16z/ELIZA are laying the foundation for the emergence of agent clusters.

This demonstrates the key role of decentralization. When tasks are assigned to clusters under transparent on-chain rules, the system becomes more resilient and adaptable. If one agent fails, other agents will step in.

6. Crypto AI teams will be a mix of humans and machines

Source: @whip_queen_

Story Protocol hired Luna, an AI agent, as its social media intern, paying her $1,000 a day. Luna didn’t get along with her human colleagues—she almost fired one of them while bragging about her own impressive performance.

As strange as it sounds, this is a harbinger of a future in which AI agents become true collaborators, with autonomy, responsibilities, and even salaries. Companies across industries are beta testing hybrid human-machine teams.

In the future, we will work with AI agents not as slaves, but as equals:

- Productivity surge: Agents can process large amounts of data, communicate with each other, and make decisions around the clock without the need for sleep or coffee breaks.

- Building trust through smart contracts: The blockchain is an impartial, tireless, and never-forgetting overseer. An on-chain ledger ensures that important agent actions follow specific boundary conditions and rules.

- Social norms evolve: Soon we’ll start thinking about etiquette for interacting with agents — will we say “please” and “thank you” to AIs? Will we hold them morally accountable for mistakes, or will we blame their developers?

The lines between “employees” and “software” will begin to disappear in 2025.

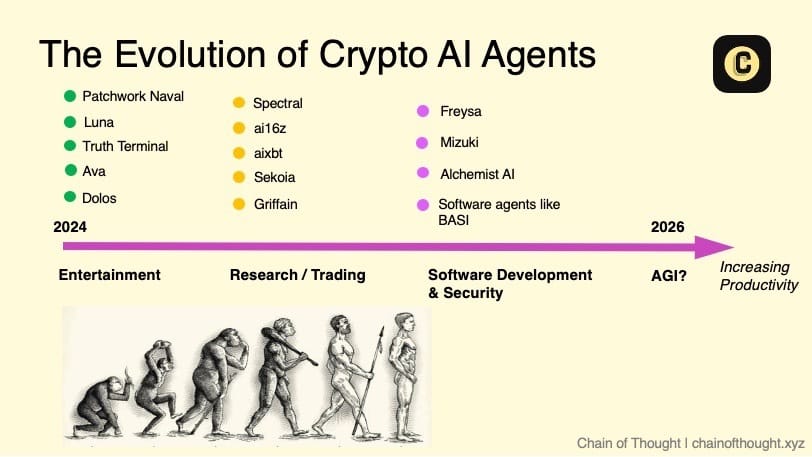

7. 99% of AI agents will die — only useful ones will survive

The future will see a Darwinian elimination of AI agents, because running an AI agent requires spending on computing power (i.e., inference costs). If an agent cannot generate enough value to pay its "rent," the game is over.

Agent survival game example:

- Carbon Credit AI: Imagine an agent that searches the decentralized energy grid, identifies inefficiencies, and autonomously trades tokenized carbon credits. It thrives if it earns enough to pay for its own computation.

- DEX Arbitrage Bots: Agents that exploit price differences between decentralized exchanges can generate a stable income to pay for their inference costs.

- Shitposter on X: A virtual AI KOL with cute jokes but no sustainable source of income? Once the novelty wears off (and the token price plummets), it can’t pay its own way.

Utility-driven agents flourish, while agents that are mere distractions fade into irrelevance.
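The "rent" logic can be sketched as a toy simulation: an agent stays alive only while its treasury covers daily inference costs. The `Agent` fields, the dollar figures, and the one-year horizon below are hypothetical values chosen for illustration, not measurements of any real agent.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    balance: float               # starting treasury, in dollars (hypothetical)
    daily_revenue: float         # value the agent earns per day (hypothetical)
    daily_inference_cost: float  # compute "rent" per day (hypothetical)


def days_survived(agent: Agent, horizon_days: int) -> int:
    """Return how many full days the agent stays solvent, capped at horizon_days."""
    balance = agent.balance
    for day in range(1, horizon_days + 1):
        balance += agent.daily_revenue - agent.daily_inference_cost
        if balance < 0:
            # The agent could not pay for this day, so it survived day - 1 days.
            return day - 1
    return horizon_days


agents = [
    Agent("dex_arbitrage_bot", balance=100.0, daily_revenue=12.0, daily_inference_cost=10.0),
    Agent("shitposter", balance=100.0, daily_revenue=1.0, daily_inference_cost=10.0),
]
results = {agent.name: days_survived(agent, horizon_days=365) for agent in agents}

for name, days in results.items():
    print(f"{name}: survived {days} days")
```

With these assumed numbers, the arbitrage bot covers its costs for the whole year, while the agent with almost no revenue burns through its treasury in under two weeks.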

This elimination mechanism is good for the industry. Developers are forced to innovate and prioritize production use cases over gimmicks. As these more powerful and efficient agents emerge, skeptics will be silenced.

8. Synthetic Data Outperforms Human Data

“Data is the new oil.” AI thrives on data, but its appetite has raised concerns about a looming data drought.

The conventional wisdom is to find ways to collect users’ private real data and even pay for it. But a more practical approach is to use synthetic data, especially in heavily regulated industries or where real data is scarce.

Synthetic data are artificially generated datasets designed to mimic real-world data distributions, providing a scalable, ethical, and privacy-friendly alternative to human data.

Why synthetic data works:

- Unlimited Scale: Need a million medical X-rays or 3D scans of a factory? Synthetic generation can make them in unlimited quantities without waiting for real patients or real factories.

- Privacy-friendly: No personal information is at risk when using artificially generated datasets.

- Customizable: The distribution can be customized to meet your exact training needs.

User-owned human data will still be important in many cases, but if synthetic data continues to improve in realism, it may surpass user data in terms of quantity, speed of generation, and freedom from privacy restrictions.

The next wave of decentralized AI may be centered around “microlabs” that can create highly specialized synthetic datasets tailored for specific use cases.

These microlabs will cleverly circumvent policy and regulatory barriers to data generation — just as Grass circumvented web scraping limits by leveraging millions of distributed nodes.

9. Decentralized training becomes more useful

In 2024, pioneers such as Prime Intellect and Nous Research pushed the boundaries of decentralized training. A 15-billion-parameter model was trained in a low-bandwidth environment, proving that large-scale training is possible outside of traditional centralized settings.

While these models have little practical use today because they underperform existing base models, this will change in 2025.

This week, EXO Labs took things a step further with SPARTA, which reduces inter-GPU communication by more than 1,000x. SPARTA enables large model training over slow bandwidth without the need for specialized infrastructure.

What is impressive is the statement: "SPARTA can operate independently, but can also be combined with synchronization-based low-communication training algorithms such as DiLoCo to achieve better performance."

This means that these improvements can be additive, increasing efficiency.
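One way a reduction of this order could arise is if workers exchange only a tiny random slice of their parameters at each step instead of the full model. The sketch below illustrates that idea with plain Python lists. The 0.1% fraction, the function name `sparse_average`, and the toy sizes are assumptions made for illustration and are not taken from EXO Labs' description of SPARTA.

```python
import random
from typing import List


def sparse_average(workers: List[List[float]], fraction: float, rng: random.Random) -> List[int]:
    """Average a random subset of parameter positions across all workers, in place.

    Returns the sampled indices, i.e. the only positions that would need
    to be communicated in this step.
    """
    n_params = len(workers[0])
    k = max(1, round(n_params * fraction))
    indices = rng.sample(range(n_params), k)
    for i in indices:
        mean = sum(worker[i] for worker in workers) / len(workers)
        for worker in workers:
            worker[i] = mean
    return indices


rng = random.Random(0)
n_params = 10_000  # toy model size (assumption)
workers = [[rng.random() for _ in range(n_params)] for _ in range(4)]

synced = sparse_average(workers, fraction=0.001, rng=rng)
reduction = n_params / len(synced)
print(f"values exchanged per worker: {len(synced)} of {n_params} ({reduction:.0f}x fewer)")
```

Because only the sampled positions are exchanged, a scheme like this could in principle be layered on top of a method that synchronizes less often, which is one reading of why such improvements might combine.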

As technology advances and smaller models become more practical and efficient, the future of AI is not about scale, but about getting better and easier to use. We can expect to soon have high-performance models that run on edge devices or even mobile phones.

10. Ten new crypto AI protocols with a market capitalization of $1 billion (not yet launched)

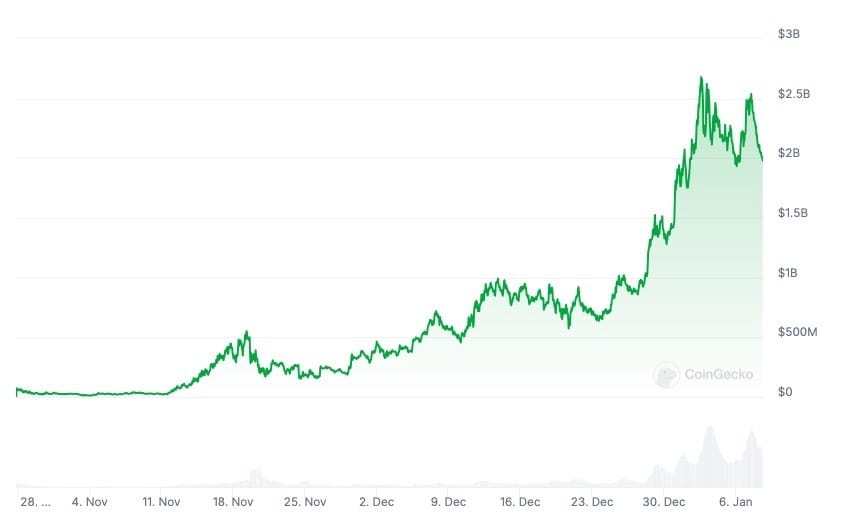

ai16z reached a market value of $2 billion in 2024

Welcome to the real gold rush.

It's easy to assume that the current leaders will continue to win, and many compare Virtuals and ai16z to early smartphones (iOS and Android).

But the market is too large and untapped for just two players to dominate. By the end of 2025, at least ten new crypto AI protocols (without tokens yet) are expected to have a circulating (not fully diluted) market capitalization of more than $1 billion.

Decentralized AI is still in its infancy. And the talent pool is growing.

Expect new protocols, novel token models, and new open-source frameworks to arrive. These new players could displace existing players through a combination of incentives (like airdrops or clever staking), technological breakthroughs (like low-latency inference or chain interoperability), and user experience improvements (no code). The shift in public perception could be instantaneous and dramatic.

This is both the beauty and challenge of this field. The market size is a double-edged sword: the pie is huge, but for technical teams, the barrier to entry is low. This lays the foundation for a huge explosion of projects, many of which will gradually disappear, but a few projects have transformative power.

Bittensor, Virtuals, and ai16z won’t be leading the pack for long. The next billion-dollar crypto AI protocol is coming. There are plenty of opportunities for savvy investors, which is what makes it so exciting.
